This post was originally part of my series on Python for Feature Film on my personal site, but is being ported here with minor changes. The dates have been adjusted to match release dates for the projects.

In Part 8 of my series on Python for Feature Film, I’ll focus on Smallfoot. I was one of two pipeline supervisors on this show, along with Ryan.

Together we pushed this absolute monster of a show through to the finish line. It was also the last movie I worked on before leaving the film industry to join tech.

Challenges on Smallfoot

In the world of Smallfoot, there are a few common issues:

- There’s a lot of everything: Yetis, buildings, trees. More of it than on any other show I’ve worked on.

- A lot of stuff is really big. The characters are massive, and the world is massive to accommodate them. Big.

- A lot of stuff has hair. Hair is expensive and hard to do.

- Everyone’s working in parallel.

Additionally, our entire pipeline crew was new to the company. They were great, but having an entire crew that has never seen our pipeline before is a daunting challenge. Other shows like Spider-Verse had absorbed the other experienced TDs, so Ryan and I had to do our best to onboard a large crew of TDs. Hopefully we did a good job as mentors, but the new TDs definitely did well by themselves too.

Okay, so let’s go through each of those in order!

Can’t see the forest for the trees

Smallfoot takes place in three areas: the mountain top, the forest, and the city.

The mountain top was relatively easy, but the forest and city posed some complex issues in scene management. Each of those scenes simply had too many objects for the scene graph to handle material assignments.

Even though we used instancing, each tree was instanced at the pine needle level. This was fine for actually loading the geometry into the scene, but it was impossible for a scene graph to traverse hierarchies that deep to assign materials and the like.

At least, not within a reasonable time and memory budget. We were seeing frames that took a minute to render but over 6 hours to process the scene graph before the render could even start.

Ryan had the brilliant idea to flatten the hierarchies and point-instance the assets. Flat hierarchies are terrible for artists to work with at scale, but we set things up so that each environment was automatically reprocessed whenever it changed, and lighters would just pull in the flattened environment.

This is quite simple to do with the scene and Alembic APIs. You iterate through the scene and build up an Alembic point-instanced scene from every asset you find. Of course, we only did this for static elements, but it meant we processed each environment once (still six-plus hours) and never paid that cost again.
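To make the flattening step concrete, here is a minimal, library-free sketch. It does not touch the real scene or Alembic APIs: the `Node` class, the 4x4 matrix convention and the asset names are all hypothetical stand-ins. It walks the hierarchy once, accumulates each node's world transform, and stops at any node that references a static asset, recording one world matrix per instance. The resulting `{asset: [matrices]}` map is the kind of data you would then hand to a point instancer as prototypes plus per-instance transforms.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Optional

Matrix = list[list[float]]  # 4x4, column-vector convention: world = parent @ local


def identity() -> Matrix:
    return [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]


def translate(x: float, y: float, z: float) -> Matrix:
    m = identity()
    m[0][3], m[1][3], m[2][3] = x, y, z
    return m


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


@dataclass
class Node:
    """Hypothetical scene node: either a plain group or a reference to a static asset."""
    name: str
    local: Matrix = field(default_factory=identity)
    asset: Optional[str] = None  # e.g. "pine_a"; None for plain groups
    children: list["Node"] = field(default_factory=list)


def flatten_to_instances(root: Node) -> dict[str, list[Matrix]]:
    """Collapse a deep hierarchy into {asset name: [world matrix per instance]}."""
    instances: dict[str, list[Matrix]] = defaultdict(list)
    stack = [(root, identity())]
    while stack:
        node, parent_world = stack.pop()
        world = matmul(parent_world, node.local)
        if node.asset is not None:
            # Stop here: the asset's internal hierarchy (down to the needles)
            # is stored once as a prototype, not traversed for every copy.
            instances[node.asset].append(world)
            continue
        stack.extend((child, world) for child in node.children)
    return dict(instances)


# Usage: a small grove with two copies of one tree asset and a rock.
grove = Node("grove", translate(10, 0, 0), children=[
    Node("tree_1", translate(1, 0, 0), asset="pine_a"),
    Node("tree_2", translate(2, 0, 0), asset="pine_a"),
    Node("rock_1", translate(0, 5, 0), asset="rock_b"),
])
instances = flatten_to_instances(grove)
```

Because traversal stops at the asset boundary, nothing downstream has to walk the per-needle hierarchy inside each tree for every copy, which is the part that made the original scenes so slow to process.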

This brought down our scene processing times from 6 hours to 8 minutes. But there was a catch…

The system we used relied heavily on string processing. On some shots, that meant over 300GB of memory just to start processing a scene, so our machines would kill the task before it could finish.

So I sat down and optimized the algorithm to be significantly more efficient. This was all in C++, but truthfully could have been done in Python too. The shot that was taking over 300GB and several hours to process was now down to 20GB of memory and ran in 15 minutes. Not bad.

Big Stuff

Truthfully, the scale of the characters wasn’t a big issue on this show, but the scale of the data we generated was.

Between the number of crowds, the hair caches, and one particular rig, we were eating through disk space like nobody’s business.

So we tackled them one by one:

- It’s easy to write a Python script that iterates through each shot and finds crowd characters and hair caches that are no longer in use. Traditionally we’d keep these around since disk space is cheap, but this show was really pushing it.

- For the giant rig, we realized that its animation was mostly transform-based. Alembic caches let you write data either as deforming vertices or as transforming objects, but at some point the rig had been set to write vertex caches. One of our TDs wrote some heuristics to detect when this wasn’t necessary, which saved hundreds of gigabytes and several hours per publish.
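A cleanup script like the one in the first point can be quite small. This is a minimal sketch assuming a hypothetical layout of `<show_root>/<shot>/caches/<cache_name>/`, and assuming you already know which caches each shot's current publishes reference (in a real pipeline that would come from the asset database). It only reports candidates; actually deleting them is left as a deliberate, separate step.

```python
from pathlib import Path


def find_unused_caches(show_root: Path, referenced: dict[str, set[str]]) -> list[Path]:
    """Return cache directories that no current publish references.

    Assumes a hypothetical layout: <show_root>/<shot>/caches/<cache_name>/
    and that `referenced` maps shot name -> set of cache names still in use.
    """
    unused = []
    for shot_dir in sorted(p for p in show_root.iterdir() if p.is_dir()):
        cache_root = shot_dir / "caches"
        if not cache_root.is_dir():
            continue  # shot has no caches at all
        in_use = referenced.get(shot_dir.name, set())
        for cache_dir in sorted(p for p in cache_root.iterdir() if p.is_dir()):
            if cache_dir.name not in in_use:
                unused.append(cache_dir)
    return unused
```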
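The post doesn't spell out which heuristics the TD used for the giant rig, so the following is only an illustrative sketch of one possible check, not their method. It relies on the fact that a rigid motion (rotation plus translation) preserves distances: if every vertex keeps its distance to a few anchor vertices on every frame, the mesh could be written as a transform instead of per-vertex positions. The function names, the anchor choice and the tolerance are assumptions.

```python
import math
from typing import Optional, Sequence

Vec3 = tuple[float, float, float]


def default_anchors(n: int) -> list[int]:
    """Pick a handful of spread-out vertex indices (an arbitrary, hypothetical choice)."""
    step = max(1, n // 4)
    return list(range(0, n, step))


def is_rigid_frame(rest: Sequence[Vec3], frame: Sequence[Vec3],
                   anchors: Sequence[int], tol: float = 1e-4) -> bool:
    """True if every vertex keeps its distance (within tol) to each anchor vertex.

    Rigid motion preserves all pairwise distances, so any larger change
    means the mesh is genuinely deforming on this frame.
    """
    if len(rest) != len(frame):
        return False
    for a in anchors:
        for r, f in zip(rest, frame):
            if abs(math.dist(rest[a], r) - math.dist(frame[a], f)) > tol:
                return False
    return True


def can_cache_as_transforms(rest: Sequence[Vec3], frames: Sequence[Sequence[Vec3]],
                            anchors: Optional[Sequence[int]] = None,
                            tol: float = 1e-4) -> bool:
    """True if every frame is a rigid copy of the rest pose."""
    if anchors is None:
        anchors = default_anchors(len(rest))
    return all(is_rigid_frame(rest, f, anchors, tol) for f in frames)
```

With four or more non-coplanar anchors, matching distances pin down the whole mesh up to a mirror image, so this is a fairly strict test. It would, however, reject animated scale, which a production check would probably need to allow.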

Hair, Hair, Everywhere

Every character in this show has hair, and we just didn’t have the man-hours to dedicate Character Effects artists to every single shot and keep them waiting on renders.

Our solution was to build a task that ran after each animation publish and created hair simulations automatically.

After every animation publish, a hair simulation would start, followed by a render of that simulation. Once those were done, we could review them and assign out only the characters that needed an artist’s attention.

It also saved time for those characters because an artist would know what the default settings looked like, and could tweak from there.
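As a rough illustration of the shape of that post-publish task, here is a sketch that expands one animation publish into dependent jobs: a hair sim per character, a render that waits on its sim, and a single review job that waits on every render. The `Job` class and the naming scheme are hypothetical; a real version would submit these to whatever render queue the studio uses.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    """Hypothetical farm job: a name plus the names of jobs it must wait for."""
    name: str
    depends_on: list[str] = field(default_factory=list)


def hair_jobs_for_publish(shot: str, version: int, characters_with_hair: list[str]) -> list[Job]:
    """Expand one animation publish into sim -> render jobs, plus one review job."""
    prefix = f"{shot}_v{version:03d}"
    jobs: list[Job] = []
    render_names: list[str] = []
    for character in characters_with_hair:
        sim = Job(f"{prefix}_{character}_hairsim")
        render = Job(f"{prefix}_{character}_hairrender", [sim.name])  # render waits on its sim
        jobs += [sim, render]
        render_names.append(render.name)
    # Review only becomes available once every default-settings render is done.
    jobs.append(Job(f"{prefix}_hair_review", render_names))
    return jobs
```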

Hair is also really expensive to render, store and simulate, so we built systems that lowered the density and quality of the hair based on its distance to camera: a hair level-of-detail (LOD) system.
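One way such a level-of-detail scheme could work is sketched below; the squared falloff, the thresholds and the hashing trick are assumptions for illustration, not the production settings. `hair_density` picks a fraction of strands to keep from the camera distance, and `keep_strand` decides per strand in a stable way.

```python
def hair_density(distance: float, full_detail_distance: float, min_density: float = 0.05) -> float:
    """Fraction of strands to keep at a given camera distance.

    Assumes density should track projected screen area, i.e. fall with the
    square of distance once past `full_detail_distance`, but never below
    `min_density`.
    """
    if distance <= full_detail_distance:
        return 1.0
    return max(min_density, (full_detail_distance / distance) ** 2)


def keep_strand(strand_id: int, density: float) -> bool:
    """Deterministically decide whether a strand survives at this density.

    Hashing the id (Knuth's multiplicative hash) instead of calling random()
    keeps the same strands from frame to frame, and any strand kept at a low
    density is also kept at every higher density, so hair doesn't flicker
    as a character moves toward the camera.
    """
    h = (strand_id * 2654435761) % 2**32
    return h / 2**32 < density


# Usage: a character 40 units away with full detail inside 10 units keeps ~6% of strands.
kept = [s for s in range(10_000) if keep_strand(s, hair_density(40.0, 10.0))]
```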

New to the Pipeline

So, as I said, our team had 9 new hires to the studio, and we really had to show them the ropes.

There’s not much to say here other than to give some advice:

- Be patient

- Write out every task with clear goals. Don’t rely on just discussing it.

- Meet regularly with each of the team to see how they’re doing.

- Try and make sure they’re not doing too much overtime, and keep them from ghosting.

- Communicate in team chats so everyone is aware of what’s going on.

- Do team lunches to build camaraderie. A friendly team is one that will learn from each other better.

Since many readers here will also be new to a studio’s pipeline, here are a few images to show the flow of a shot.

Race Conditions

Pipelines, much like anything that happens in parallel, can suffer from race conditions.

One of the biggest here was that, late in production, the Environment Modeling team swapped out all the base trees from under the shot layout artists, who had been moving hundreds of trees to suit each shot’s needs. This caused a dramatic conflict in our data over which tree was the one we wanted.

Usually this isn’t a big deal, because we can lock versions pretty easily and give precedence to a particular team’s decisions. In this instance, however, we were facing several issues:

- Leadership wanted to see all the changes from the Environment Modeling team, so we couldn’t lock down a single version without a conflict.

- Our system for giving precedence to a team’s opinion is based on path names, but the names had changed, which meant we couldn’t match them up.

- Additionally, not only had the names changed, but positions had too. With tens of thousands of trees, efficiently matching them was a struggle.

Fixing this manually could take an artist weeks, which is not ideal when they still have other shots to finish. Enter the k-d tree.

Since we had to resolve both of these conflicts (changed names and changed positions), the way forward was to match every original tree to the closest tree in the new environment.

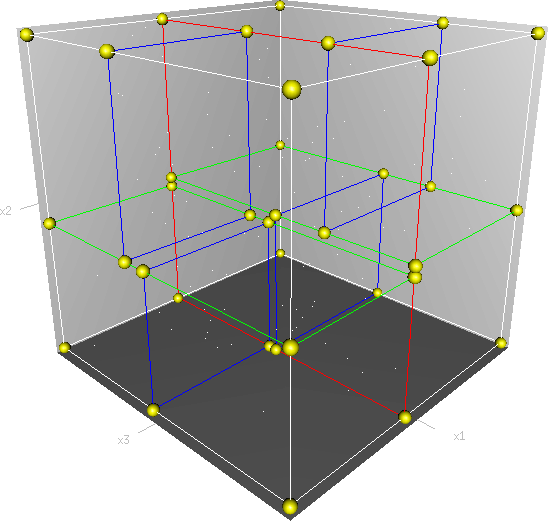

Simply searching for the nearest point by iterating over every tree would be wildly inefficient, since each lookup would scan the whole environment. I had the idea to use a k-d tree, a data structure that recursively splits space into partitions. We populate the k-d tree with each tree’s position in the new environment, and then, for each tree in the old environment, we look up the nearest point.

Thanks to the space partitioning, finding the nearest matching point is relatively efficient. Each time we find one, we eliminate it from future searches, thereby reducing the search time for each subsequent tree.
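Here is a minimal pure-Python sketch of that idea: build a k-d tree over the new trees' positions, then, for each old tree, find the nearest new tree that hasn't already been claimed and mark it as taken. The names (`match_trees`, `max_distance` and so on) are hypothetical, and in this sketch matched points are flagged rather than physically removed, so the k-d tree keeps its shape.

```python
import math
from dataclasses import dataclass
from typing import Optional

Point = tuple[float, float, float]


@dataclass
class KDNode:
    point: Point
    index: int                  # position of this tree in the new-environment list
    axis: int                   # 0, 1 or 2: which coordinate this node splits on
    left: Optional["KDNode"] = None
    right: Optional["KDNode"] = None
    taken: bool = False         # set once this tree has been matched


def build(items: list[tuple[Point, int]], depth: int = 0) -> Optional[KDNode]:
    """Recursively split on the median of x, y, z in turn."""
    if not items:
        return None
    axis = depth % 3
    items = sorted(items, key=lambda item: item[0][axis])
    mid = len(items) // 2
    point, index = items[mid]
    return KDNode(point, index, axis,
                  build(items[:mid], depth + 1),
                  build(items[mid + 1:], depth + 1))


def nearest(node: Optional[KDNode], target: Point, best=None):
    """Return (distance, node) for the closest untaken point, or None if all are taken."""
    if node is None:
        return best
    if not node.taken:
        d = math.dist(node.point, target)
        if best is None or d < best[0]:
            best = (d, node)
    diff = target[node.axis] - node.point[node.axis]
    near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
    best = nearest(near, target, best)
    # Only cross the splitting plane if something closer could be on the other side.
    if best is None or abs(diff) < best[0]:
        best = nearest(far, target, best)
    return best


def match_trees(old_positions: list[Point], new_positions: list[Point],
                max_distance: float = math.inf) -> dict[int, int]:
    """Greedily map each old tree index to the index of its closest unclaimed new tree."""
    root = build([(p, i) for i, p in enumerate(new_positions)])
    matches: dict[int, int] = {}
    for old_index, pos in enumerate(old_positions):
        found = nearest(root, pos)
        if found is None or found[0] > max_distance:
            continue  # nothing left, or nothing close enough: leave it for an artist
        found[1].taken = True
        matches[old_index] = found[1].index
    return matches
```

The matching is greedy, so the order the old trees are processed in can matter when two of them compete for the same new tree. The optional `max_distance` leaves far-off trees unmatched for an artist to check rather than forcing a bad pairing. An off-the-shelf structure such as SciPy's `scipy.spatial.KDTree` would also work for the lookups, but it has no notion of removing points, so you would need a workaround such as querying several neighbours with `k > 1`.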

To further reduce the search time, I also made use of other heuristics, like shared path names in the environment. I would walk down the hierarchy of both the old and the new environment until they diverged. Usually this happened at subsections of the environment, leaving a much smaller set of trees to process.

Whenever the names diverged, I’d run the k-d tree over the remaining trees and find the best match pretty quickly.
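A sketch of that divergence step, assuming tree paths are slash-separated strings such as `/env/forest/grove_b/tree_7` (a hypothetical naming scheme). Every tree is assigned to the deepest group in its path that exists in both environments, so trees under a renamed group fall back to the nearest shared ancestor. Each resulting bucket can then be matched on its own with a nearest-neighbour search, which keeps every search small.

```python
from collections import defaultdict


def group_prefixes(paths: list[str]) -> set[tuple[str, ...]]:
    """All ancestor group paths (as component tuples, root = ()) of the given tree paths."""
    groups: set[tuple[str, ...]] = set()
    for path in paths:
        parts = tuple(path.strip("/").split("/"))
        for i in range(len(parts)):  # parts[:0] is the root, parts[:-1] the parent
            groups.add(parts[:i])
    return groups


def bucket_key(path: str, shared: set[tuple[str, ...]]) -> tuple[str, ...]:
    """Deepest ancestor group of `path` that exists in both environments."""
    parts = tuple(path.strip("/").split("/"))
    for i in range(len(parts) - 1, -1, -1):
        if parts[:i] in shared:
            return parts[:i]
    return ()


def bucket_by_shared_hierarchy(old_paths: list[str], new_paths: list[str]):
    """Map each shared group to (old trees, new trees) that diverge beneath it."""
    shared = group_prefixes(old_paths) & group_prefixes(new_paths)
    buckets = defaultdict(lambda: ([], []))
    for p in old_paths:
        buckets[bucket_key(p, shared)][0].append(p)
    for p in new_paths:
        buckets[bucket_key(p, shared)][1].append(p)
    return dict(buckets)
```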

What would have taken several hours per shot to do manually now took only a few minutes, with a very low probability of error.