This post was originally part of my series on Python for Feature Film on my personal site, but is being ported here with minor changes. The dates have been adjusted to match release dates for the projects.

Python is a programming language that has become integral to the movie making process over the last few years. There’s rarely, if ever, an animated feature or visual effects film that hasn’t had Python play a large part in getting it to the screen.

When people think about movies, even programmers often think about the artistry involved in bringing those images to life. However, the technical side of the film industry is something that often goes unnoticed outside a small group of people.

To that end, I’ve written a few blog posts about how I’ve used Python on several major films that I’ve been lucky enough to work on. Hopefully this shows how much it contributes to the entire life of a movie.

I’ve also recently released a course on Udemy, Python For Maya, to teach artists Python, since it’s becoming an increasingly valuable skill in the industry. These blog posts serve as companion material to the course as well.

With that intro out of the way let’s continue…

What is Python?

Some of you may not be familiar with Python.

Python is a programming language designed to be very easy to read and write. It’s incredibly popular in the feature film industry as well as other groups, like mathematics, science and machine learning.

You can learn more about Python on the official website.

It’s important to note that today, the film industry is largely still tied to Python 2.7. There is a concerted effort in 2020 to move over to Python 3, and we should start seeing larger Python 3 adoption starting in 2021 and moving forward.

The Feature Film Pipeline

The biggest use of Python is in our feature film pipeline.

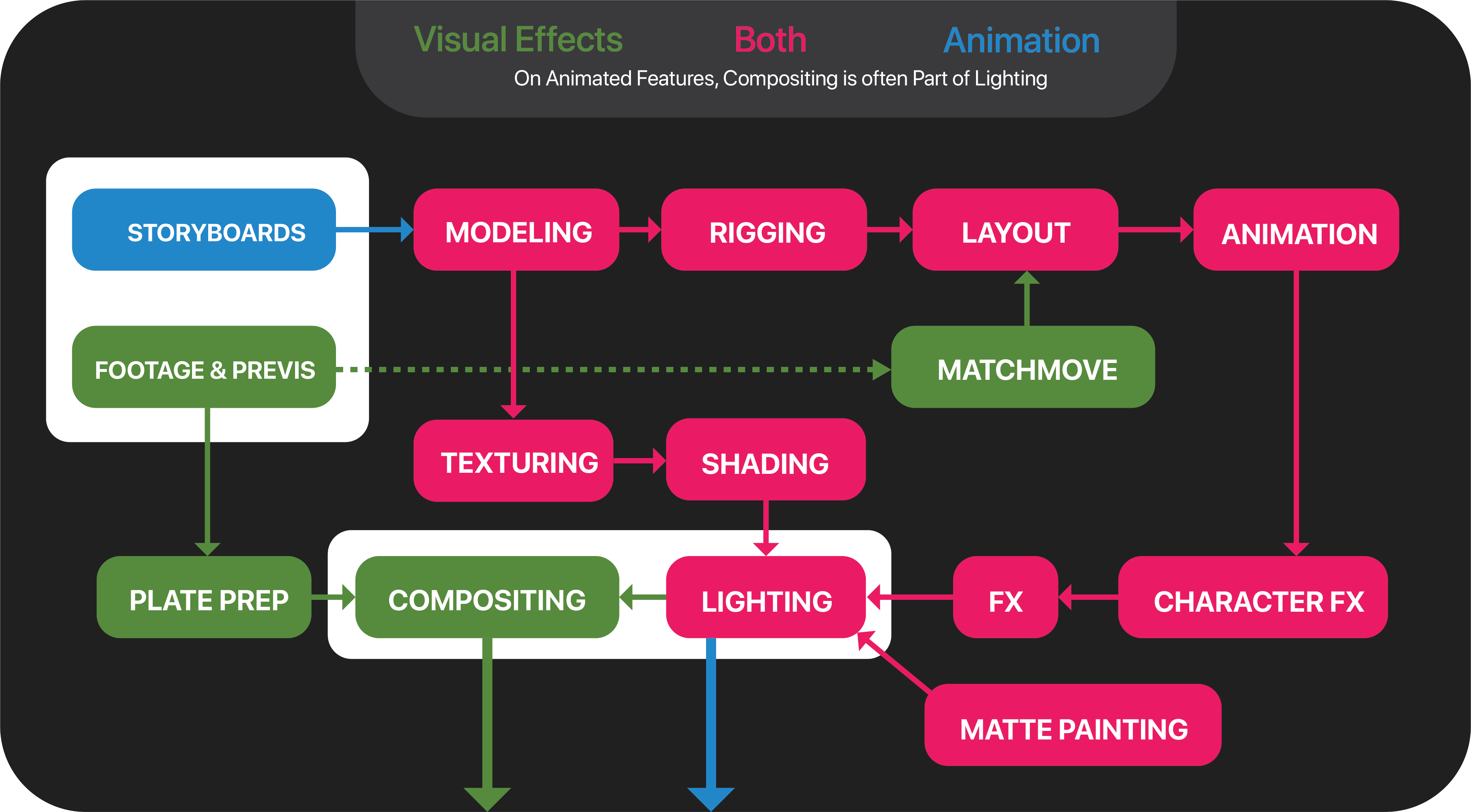

This is an image that describes the pipeline at most major studios. The Pipeline is the set of arrows that link each department together. It’s responsible for making sure that data flows between each department and that everyone can play well together. It’s also responsible for the toolsets in each department so that the artists themselves can work efficiently, but for now let’s focus on the inter-department flow.

Here you can see the various stages of a visual effects film or animated feature. Studios may differ slightly from this, but it’s the general workflow.

The storyboards/footage/previs represent the data we get, and Compositing/Lighting are the last stages of the film for us. Visual Effects Films differ slightly from animated films because you have to deal with the extra added element of film footage in the form of plates.

The Pipeline is responsible for getting the data between departments. Here’s the gist of how it works (though it is more organic a process than the one described):

- We get data from the client or story artists in the form of plates, previsualization (previs) or storyboards that tell us what is happening in the scene.

- Modeling look at all of this and generate 3D models of all the assets that will be required.

- Rigging take the modelled assets for characters and apply a virtual skeleton to make them animatable.

- Matchmove are in charge of creating virtual cameras that match the ones used to shoot the film, as well as any stand-in characters or geometry.

- Layout take the rigs, and either create their own cameras or take Matchmove’s cameras and set up the scene. They’re the equivalent of a virtual director of photography.

- The scene is then handed off to Animation, who are the equivalent of the actors. They are in charge of the movement of the characters, and bring the inanimate skeletons to life.

- CharacterFX are in charge of all the technical parts of animation. Muscle simulations, making cloth move realistically, the hair, grass etc… all comes from CharacterFX.

- FX then handle the non animation related effects. Whether it be destruction, fire, voxelization, etc… there’s a lot that FX is in charge of.

- While this is happening, Texturing are in charge of giving color to the 3D Assets so they aren’t just grey objects.

- Shading then takes these textures and gives the assets a material that tells it how light should interact with it.

- Matte Painting are the department we use when it is not logical or feasible to build an environment. We realistically can only build so much, and past that point it’s more efficient to have someone make a very high quality painting instead.

- This all gets funnelled into Lighting who are in charge of adding lights to the shot and rendering the image out. They also do a little bit of compositing to sweeten the image. On an animated feature this may be the end of the show.

- On a visual effects show, we have to prepare the plates by removing unwanted elements, removing noise or removing the lens warp. This is handled by Plate Prep, also known as RotoPaint.

- Finally everything goes to Compositing who take all the images and integrate our CG renders into the actual film plate. On a visual effects show, this will be the last stage.

We use Python to tie all these bits together. In the next section I’ll go over publishing before moving on to describe how Python is used in the individual departments.

Publishing and Asset Management

This is pretty much the core of a traditional pipeline: making sure assets can be shared between departments and tracked.

The first part of this is Asset Publishing.

When a department deems its work ready, it publishes it so the next department in the chain can consume it. For example, modeling exports models, but does animation export animation? Well, that depends on who is consuming it, and on whether the data needs to stay interactive past this point or not.

For geometry we often just publish a geometry cache using Alembic, which is an industry standard developed by Imageworks and ILM so that we can have a consistent cache format. For point cloud data we use either Alembic or OpenVDB, and for images we tend to use TIFF or OpenEXR. Soon the industry will probably also standardize on a universal scene format from Pixar called OpenUSD.

Anyway the idea is to generally keep the data in the most efficient format possible, while allowing for easy interchange. Cache data is often best because you really only take an IO hit, which is cheap, versus a deformation hit, which can be very expensive.

This all gets really complex though. Artists don’t need to know where their data is coming from or going to, or even how it gets there. They just need to be able to load it in and publish it out.

This is the pipeline. We develop the UIs and the tools to do this in a very user friendly manner.

To publish data, the user just has to open a publish UI that will validate their assets against some tests, and then send it off to the machine farms where the conversion to one of the open formats happens.
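To make the validation step a little more concrete, here is a minimal sketch in plain Python. Everything in it is an assumption for illustration: the asset is a simple dictionary, and the check functions and `validate_for_publish` are hypothetical names, not any studio's actual publisher. A real tool would run checks like these inside the host application before sending the job to the farm.

```python
# Hypothetical sketch: each check takes an asset description (a plain dict here)
# and returns an error message, or None if the asset passes that check.

def check_has_name(asset):
    if not asset.get("name"):
        return "asset has no name"
    return None

def check_has_geometry(asset):
    if not asset.get("meshes"):
        return "asset contains no meshes"
    return None

def check_no_spaces_in_name(asset):
    if " " in asset.get("name", ""):
        return "asset name contains spaces"
    return None

CHECKS = [check_has_name, check_has_geometry, check_no_spaces_in_name]

def validate_for_publish(asset, checks=CHECKS):
    """Run every check and return the failure messages (an empty list means OK)."""
    errors = []
    for check in checks:
        message = check(asset)
        if message is not None:
            errors.append(message)
    return errors

# Made-up example assets.
good = {"name": "heroCar", "meshes": ["body", "wheels"]}
bad = {"name": "hero car", "meshes": []}

print(validate_for_publish(good))
print(validate_for_publish(bad))
```

Keeping each check as its own small function means a department can add or remove tests without touching the publish UI itself.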

To ingest the published data, we similarly just have an asset browser that lets the artist pick which asset they want. Often they just see a thumbnail and description. The details are irrelevant to most artists.

Because these publishing and asset management systems need to be common to multiple apps, we develop them in Python and Qt (PyQt or PySide), which allows us to reuse our code without recompiling for each application and makes it easy to rapidly add functionality where needed.

This is common to pretty much every department, so I figure it warrants its own section here rather than repeating for each.

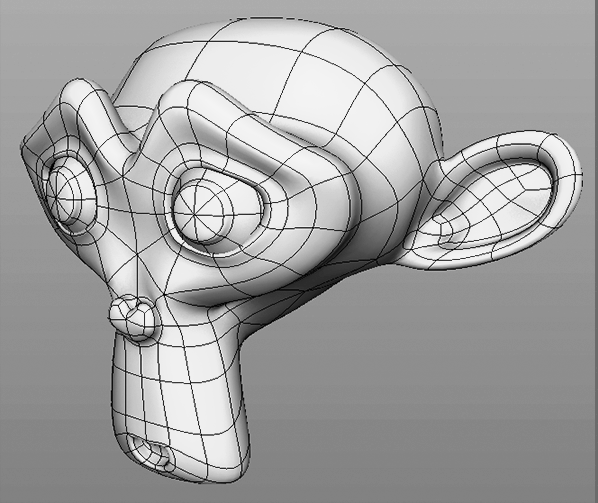

Modeling

Modeling is the department in charge of creating the 3D source geometry used by every other department. Often there are quite a few repetitive tasks involved in regards to placing or editing geometry, or even just managing the scene.

This is where Python comes in handy. Most 3D packages have a Python API that lets you program everything that you would otherwise do manually.

So instead of spending 10 minutes creating a simple asset, you can script it up and then just click a button when you need it next time. Those 10 minutes saved really add up, and over the course of a project you may be saving hundreds of hours that could be better spent building more complex assets that require artistic input.

For example, in my course Python For Maya I go over creating a simple gear using Python as well as creating a simple UI so that you can specify how many teeth the gear has and how long they will be.
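To give a flavour of what a scripted asset looks like, here is a small sketch in plain Python rather than Maya. It is not the code from the course: the function name `gear_outline` and its parameters are made up for illustration, and it only computes a 2D outline with alternating radii. In a 3D package you would feed the same two inputs, tooth count and tooth length, into that package's own geometry commands.

```python
import math

def gear_outline(teeth=10, inner_radius=1.0, tooth_length=0.3):
    """Return (x, y) points around a very simple gear profile.

    The circle is split into two spans per tooth. Even spans sit at the
    outer radius (the teeth) and odd spans at the inner radius (the gaps).
    """
    if teeth < 3:
        raise ValueError("a gear needs at least 3 teeth")

    spans = teeth * 2
    points = []
    for i in range(spans):
        angle = 2.0 * math.pi * i / spans
        if i % 2 == 0:
            radius = inner_radius + tooth_length
        else:
            radius = inner_radius
        points.append((radius * math.cos(angle), radius * math.sin(angle)))
    return points

# Changing two numbers gives a different gear, with no manual modeling.
small_gear = gear_outline(teeth=8, tooth_length=0.2)
big_gear = gear_outline(teeth=24, tooth_length=0.5)
print(len(small_gear), len(big_gear))
```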

This can get more complex though, with Python being used to create custom deformers or interactive tools, as this demo from Hans Godard shows.

Rigging

Rigging’s job is to create a skeleton for the character geometry so that it can deform, just like a real human would.

Of course that’s an over simplification. Rigging is also essentially creating the interface that animators use to bring these creatures to life, but they also have to make sure that any movement happens convincingly. If an animator moves the arm, the rigger must make sure that the shoulders deform properly.

Python plays an integral role in Rigging. Here are just some of the uses:

- Creating automated builds of rigs. Rather than doing everything manually, rigs can be composed using code which makes them easy to reuse.

- Developing custom deformers or nodes to perform operations not native to the application.

- Developing supporting scripts for animators to switch between modes or controls, etc.
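As a loose illustration of the first point, rigs composed from code, here is a sketch in plain Python. The naming convention, the joint lists and the functions `build_limb` and `build_biped` are all hypothetical. A real build script would create joints and controls in the 3D application instead of returning names, but the idea of reusing one limb function for every arm and leg is the same.

```python
def build_limb(side, name, joints):
    """Return the joint names for one limb, e.g. 'L_arm_shoulder_jnt'."""
    return ["{}_{}_{}_jnt".format(side, name, joint) for joint in joints]

def build_biped():
    """Compose a simple biped description out of reusable limb builds."""
    rig = {}
    for side in ("L", "R"):
        rig[side + "_arm"] = build_limb(side, "arm", ["shoulder", "elbow", "wrist"])
        rig[side + "_leg"] = build_limb(side, "leg", ["hip", "knee", "ankle"])
    return rig

biped = build_biped()
for limb in sorted(biped):
    print(limb, biped[limb])
```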

In my course Python For Maya I go over creating a controller library. Controllers are, as the name suggests, the objects animators use to control the rig. Instead of using the geometry directly, they use controls, that move the skeleton, that in turn deforms the geometry.

I go over developing a user interface with Qt to save out and import the controllers for easy reuse.

Animation

Animation is the department most people would be familiar with. They take the static rigs and give them motion, and make them emote. They’re essentially the virtual actors.

But as you can imagine, there’s a lot of repetitive actions that animators have to do that can also be scripted away using Python, or just made easier.

Examples include:

- Picking controllers. A complex rig may have hundreds of controls that clutter the view. A picker interface lets animators have a friendly UI to select the controls while hiding them in their actual view.

- Creating keys, specifically inbetweens. In the age of 2D, animators would define in between keys (the keys that give motion between poses) as a weighting towards a pose. In 3D we can have tools that help the animator say they want to weight the new key 30% of the way to the old key.

- Setting up constraints. Characters will pick up objects, and at that point animators must constrain the object and their character together so they move as one. This can be made easier with tools that handle the management of this common task.

We develop most of our tools to do this kind of thing using Python and most of our user interfaces using Qt, via either PyQt or PySide.

In my course Python For Maya I go over creating an animation tweener UI, where an animator can drag a slider to choose the weighting of their keys between two poses.
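The maths behind a tweener is a simple linear blend. Here is a minimal sketch in plain Python, assuming each pose is just a dictionary of attribute values. The function names and the example attributes are made up for illustration; inside Maya the two values would be read from the neighbouring keyframes and the result set as a new key.

```python
def tween(previous_value, next_value, weight):
    """Blend between two key values.

    A weight of 0.0 returns the previous value, 1.0 returns the next
    value, and 0.3 lands 30% of the way from previous to next.
    """
    return previous_value + (next_value - previous_value) * weight

def tween_pose(previous_pose, next_pose, weight):
    """Apply the same blend to every attribute present in both poses."""
    return {
        attr: tween(previous_pose[attr], next_pose[attr], weight)
        for attr in previous_pose
        if attr in next_pose
    }

# Hypothetical attribute values on the keys either side of the current frame.
previous_pose = {"translateY": 0.0, "rotateZ": 10.0}
next_pose = {"translateY": 4.0, "rotateZ": 50.0}

print(tween_pose(previous_pose, next_pose, 0.5))
```

A slider in the UI only has to map its position to `weight` and call the blend again each time it moves.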

Character FX

Now that Animation is done animating the character, we still have to figure out the muscles, hair and cloth that are more technical considerations but add a lot of depth to the scene.

From flowing dresses in Tangled to crazy hair in Hotel Transylvania 2 or muscle simulations in Suicide Squad, these are all handled by the Character FX department.

Like the other departments they too have a lot of use for Python.

For example:

- Setting up their simulations

- Combining multiple takes of simulations together

- Creating brand new procedural workflows.

Often overlooked, Character FX is a very important step in getting really lifelike images on screen.

FX

A completely different department to Character FX, this FX department is in charge of more procedural effects.

Destroying buildings, explosions, magical particles or even entire oceans are all driven by the FX department.

They too use Python for many things including setting up their procedural effects graphs and scripting parameters that would otherwise be time consuming to do by hand.

Python can even be used to create entire procedural nodes that generate interesting effects. It’s very powerful.

Lighting

Now that all this 3D geometry has been created, we need to convert it to images that can be displayed on screen, but if we were to render it as is, with no lights, the image would be black.

Lighting are in charge of adding the lights to the scene and making it look interesting as cinema. They are the ones who can set the mood of the shot, make it dark and menacing or happy and vibrant even if nothing is happening.

Even here, Python can be incredibly useful because a scene may have many, many lights and many, many 3D assets. Here, we can make UIs that help make these scenes manageable.

What can often be a scene with billions, or even trillions, of objects, can be distilled down to simple user interfaces.

In my course Python For Maya I go over the creation of a lighting manager using PyMel and Qt to generate a UI that lets us control all the lights in the scene. It also goes over importing and exporting them as JSON files, so that they can be shared among shots and artists.
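The import and export half of that idea needs nothing beyond Python's standard `json` module. Here is a minimal sketch, assuming the light settings have already been gathered into a dictionary. The light names, the attributes and the file name are made up for illustration; in Maya they would be queried from the scene.

```python
import json
import os
import tempfile

def export_lights(lights, path):
    """Write a dictionary of light settings to a JSON file."""
    with open(path, "w") as handle:
        json.dump(lights, handle, indent=2, sort_keys=True)

def import_lights(path):
    """Read light settings back from a JSON file."""
    with open(path) as handle:
        return json.load(handle)

# Hypothetical light settings for one shot.
lights = {
    "keyLight": {"type": "spotLight", "intensity": 1.5, "color": [1.0, 0.9, 0.8]},
    "fillLight": {"type": "areaLight", "intensity": 0.4, "color": [0.6, 0.7, 1.0]},
}

# Write to a temporary folder so the example does not touch real project files.
path = os.path.join(tempfile.mkdtemp(), "shot010_lights.json")
export_lights(lights, path)
restored = import_lights(path)
print(restored == lights)
```

Because the file is plain text, another artist can load the same setup into a different shot or compare two versions in a diff tool.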

Once the lighting is done, it is time to convert the scenes to images. This is done using a renderer like Arnold, RenderMan or V-Ray, among many others.

Compositing

Finally, there is Compositing.

Here we take the final footage and any rendered elements and combine them together. It’s more than just combining, because you need to make sure everything integrates well and feels like it’s all one cohesive image.
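At its very simplest, the combining part comes down to per-pixel maths. One common building block is the standard "over" operation, sketched here in plain Python for a single pixel. This assumes premultiplied RGBA values between 0 and 1, and the function name and pixel values are purely illustrative. A compositing package does the same thing across whole images, plus all the integration work on top.

```python
def over(foreground, background):
    """Composite one premultiplied RGBA pixel over another.

    Each pixel is an (r, g, b, a) tuple. The background shows through in
    proportion to how transparent the foreground is.
    """
    fr, fg, fb, fa = foreground
    br, bg, bb, ba = background
    keep = 1.0 - fa  # fraction of the background that remains visible
    return (fr + br * keep, fg + bg * keep, fb + bb * keep, fa + ba * keep)

# A half-transparent red CG element over an opaque blue plate pixel.
cg_pixel = (0.5, 0.0, 0.0, 0.5)
plate_pixel = (0.0, 0.0, 1.0, 1.0)
print(over(cg_pixel, plate_pixel))
```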

People often think of visual effects as over the top explosions and creatures, but it can be even subtle things like background replacements, or adding crowds.

Here too, Python is incredibly useful.

It can be used to automate setting up the compositing node graph in an application like Nuke, but it can also be used to do entire image processing operations itself using libraries like OpenColorIO or Pillow.

We can even use Python for Computer Vision to help track objects inside the scene. The possibilities are really quite exciting.

Final Words

I hope this blog post has been a good look into how programming can be such a vital part of bringing movies to life nowadays, and more specifically, how Python itself is so useful.

It makes sure that we can quickly make very complex relationships between all these moving pieces simpler, and also makes it so each department can work more efficiently.

It’s often overlooked since it’s not an artistic part of the process and you rarely see the direct results of it on screen, but nevertheless, it’s a skill that is increasingly valuable in getting these increasingly complex movies to the big screen.

- Header image uses template from GraphicsFuel.

- Header image contains Blender Racing Car scene by Pokedstudio.